Imagine sculpting a block of marble. At first, it looks bulky and unrefined, but as the sculptor carefully chips away the excess, the true figure emerges—lighter, leaner, and more elegant. Neural networks undergo a similar transformation through network pruning, where unnecessary connections are removed to create models that are smaller, faster, and more efficient without losing their essence.

Rather than overwhelming devices with bloated architectures, pruning carves networks into streamlined forms ready for real-world deployment.

Why Pruning Matters in Deep Learning

Modern deep learning models can contain millions, sometimes billions, of parameters. While this power is impressive, it comes with costs: massive memory requirements, longer inference times, and high energy consumption. Pruning reduces these burdens by trimming connections that contribute little to final predictions.

It’s the difference between carrying an overstuffed suitcase and one packed with only essentials. Both get you to your destination, but the lighter one makes the journey smoother.

Students in a data science course in Pune often discover how pruning allows even modest hardware to run powerful models—demonstrating how efficiency is just as critical as accuracy in practical applications.

Magnitude-Based Pruning: Cutting the Smallest Branches

One of the most common techniques is magnitude-based pruning. Here, weights with the smallest absolute values are considered least important and removed. The logic is simple: if a connection contributes very little, cutting it won’t significantly affect performance.
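To make the idea concrete, here is a minimal sketch in plain Python. It assumes a layer's weights are available as a flat list of floats; the function name `magnitude_prune` and the sample values are illustrative only and do not come from any particular framework.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to zero.

    weights  -- flat list of floats (a hypothetical layer's weights)
    sparsity -- fraction of weights to remove, between 0.0 and 1.0
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_prune = set(order[:n_prune])
    # "Removing" a connection here means setting its weight to zero.
    return [0.0 if i in to_prune else w for i, w in enumerate(weights)]


layer = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = magnitude_prune(layer, sparsity=0.5)
print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The three weights closest to zero are dropped while the large ones pass through unchanged. In a real project the same ranking would usually be applied to framework tensors rather than Python lists.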

Think of pruning a tree. Tiny, weak branches that don’t bear fruit are clipped to allow stronger branches to thrive. In neural networks, this strategy leads to smaller models that retain most of their predictive power while requiring fewer resources.

Learners in a data scientist course often experiment with magnitude-based pruning, observing how accuracy remains surprisingly stable even after substantial reductions in parameters.

Structured Pruning: Clearing Entire Pathways

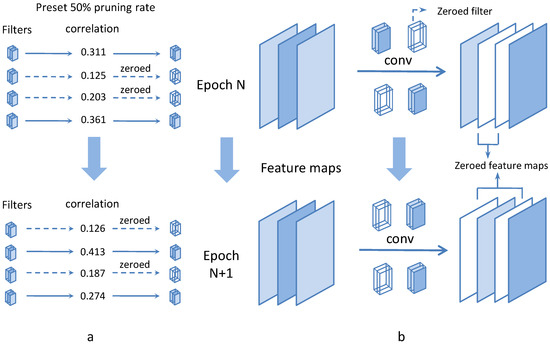

While magnitude pruning removes individual weights, structured pruning targets entire neurons, filters, or layers. Because whole units disappear, the remaining weights still form smaller dense tensors, so the model not only shrinks in size but also runs faster on standard hardware and is easier to deploy.
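A small sketch of neuron-level pruning follows. It assumes a fully connected layer stored as a list of rows, one row of incoming weights per neuron, and it scores each neuron by the sum of its absolute weights (the L1 norm). That scoring rule is one common choice, picked here for illustration; the name `prune_neurons` is hypothetical.

```python
def prune_neurons(weight_rows, keep):
    """Keep the `keep` rows (neurons) with the largest L1 norm.

    weight_rows -- list of rows; row i holds the incoming weights of neuron i
    keep        -- number of neurons to retain
    Returns (kept_indices, smaller_matrix).
    """
    if not 0 < keep <= len(weight_rows):
        raise ValueError("keep must be between 1 and the number of neurons")
    # Score every neuron by the total magnitude of its incoming weights.
    scores = [sum(abs(w) for w in row) for row in weight_rows]
    ranked = sorted(range(len(weight_rows)), key=lambda i: scores[i], reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original neuron order
    return kept, [weight_rows[i] for i in kept]


layer = [
    [0.9, -0.7, 0.4],     # neuron 0: large weights
    [0.01, 0.02, -0.01],  # neuron 1: near zero
    [-0.5, 0.6, 0.3],     # neuron 2: large weights
    [0.05, -0.03, 0.02],  # neuron 3: near zero
]
kept, smaller = prune_neurons(layer, keep=2)
print(kept)                                # [0, 2]
print(len(smaller), "x", len(smaller[0]))  # 2 x 3
```

The result is a genuinely smaller 2 x 3 matrix rather than a 4 x 3 matrix full of zeros. In a full network, the next layer's matching input columns would need to be removed as well.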

It’s like urban planning: instead of just trimming hedges, entire roads are redesigned to make traffic flow more efficient. In the same way, structured pruning ensures that models aren’t just smaller but better optimised for performance.

Practical exposure during a data science course in Pune often includes applying structured pruning to convolutional networks—showing learners how a slimmer architecture can still handle complex image recognition tasks.

Dynamic and Iterative Pruning: Pruning with Precision

Pruning doesn’t always happen in a single sweep. Iterative pruning removes connections gradually during training, giving the model time to adapt after each cut. Dynamic pruning takes it further, adjusting which connections to remove as learning progresses.
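The prune-then-adapt loop of iterative pruning can be sketched as below. This is a simplified illustration: `fake_fine_tune` is a stand-in for real training, the weights are a plain list, and the 25% per-round fraction is an arbitrary assumption. Dynamic pruning, where removed connections may be reconsidered, is not shown.

```python
def iterative_prune(weights, rounds, fraction_per_round, fine_tune):
    """Alternate pruning and fine-tuning.

    Each round deactivates `fraction_per_round` of the connections that are
    still active (smallest magnitude first), then calls `fine_tune` so the
    surviving weights can adapt. Pruned positions stay at zero.
    """
    weights = list(weights)
    mask = [True] * len(weights)  # True = connection still active
    for _ in range(rounds):
        active = [i for i, m in enumerate(mask) if m]
        n_prune = int(len(active) * fraction_per_round)
        active.sort(key=lambda i: abs(weights[i]))
        for i in active[:n_prune]:
            mask[i] = False
        weights = fine_tune(weights)
        # Re-apply the mask so fine-tuning cannot revive pruned weights.
        weights = [w if m else 0.0 for w, m in zip(weights, mask)]
    return weights, mask


def fake_fine_tune(weights):
    # Placeholder for real training: it only nudges each weight slightly.
    return [w * 1.01 for w in weights]


start = [0.9, -0.4, 0.05, 0.6, -0.02, 0.3, 0.1, -0.7]
final, mask = iterative_prune(
    start, rounds=3, fraction_per_round=0.25, fine_tune=fake_fine_tune
)
print(sum(mask), "of", len(mask), "connections remain")
```

With these illustrative numbers, two connections are cut in the first round and one in each of the next two, so half of the original eight survive. The mask is what keeps earlier cuts permanent while the remaining weights continue to change.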

This is akin to tailoring a suit. The first fitting removes obvious excess fabric, but further adjustments refine the fit until it feels perfect. Similarly, iterative pruning fine-tunes the network until only the most valuable connections remain.

During advanced labs in a data scientist course, students learn how these methods help limit performance drops so that the pruned model keeps its accuracy across real-world scenarios.

Challenges and Trade-Offs

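Pruning isn’t without its pitfalls. Removing too many connections too aggressively can cripple accuracy. Additionally, removing individual weights leaves zeros scattered through otherwise dense matrices, so pruned models often need sparse storage formats, specialised libraries, or hardware support before the smaller parameter count turns into real speed or memory gains.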
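A rough back-of-the-envelope calculation shows why the gains are not automatic. The sketch below assumes a deliberately simplified storage model: 4-byte weights, and a sparse format that stores one 4-byte index alongside each surviving value. Real sparse formats differ in detail, so treat the numbers as illustrative only.

```python
def dense_bytes(n_weights, value_bytes=4):
    """Storage for a dense tensor: every weight is stored, zero or not."""
    return n_weights * value_bytes


def sparse_bytes(n_weights, sparsity, value_bytes=4, index_bytes=4):
    """Storage for a simplified sparse format: one value plus one index per nonzero."""
    nonzero = round(n_weights * (1.0 - sparsity))
    return nonzero * (value_bytes + index_bytes)


n = 1_000_000
for sparsity in (0.3, 0.5, 0.9):
    print(sparsity, dense_bytes(n), sparse_bytes(n, sparsity))
```

Under these assumptions, a model pruned to 30% sparsity takes more space in the sparse format than in the dense one, 50% sparsity only breaks even, and the savings become substantial at 90%. Light unstructured pruning on its own may therefore bring little practical benefit.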

It’s a balancing act: prune enough to achieve efficiency, but not so much that the model becomes ineffective. Like any craft, it requires skill, patience, and a clear understanding of the system being shaped.

Conclusion

Network pruning is the art of refinement in deep learning—removing unnecessary connections to reveal models that are smaller, faster, and more efficient. From magnitude-based trimming to structured and iterative methods, pruning ensures that neural networks remain both powerful and practical.

As deep learning moves from research labs to real-world devices, the ability to create leaner models becomes essential. Professionals who master pruning techniques not only build accurate systems but also scalable ones that thrive under constraints.
